Building an Internal Developer Platform

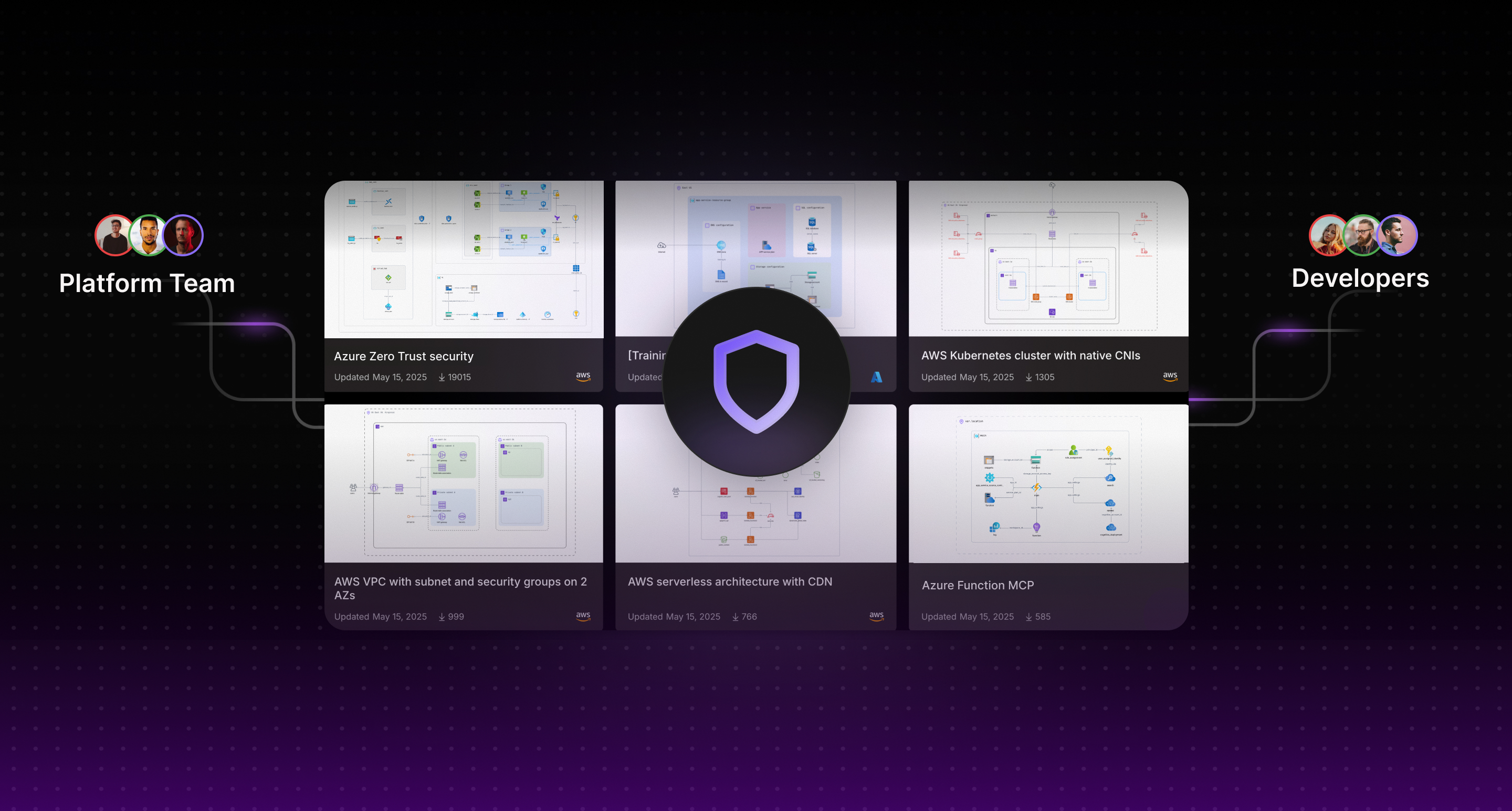

Learn how to build an Internal Developer Platform that empowers your development teams, streamlines workflows, and accelerates software delivery while maintaining security and compliance.

Discover insights, tutorials, and best practices in infrastructure, DevOps, and cloud technologies. Stay ahead with our latest articles covering modern infrastructure management.

Discover how artificial intelligence is revolutionizing enterprise cloud infrastructure design, deployment, and management for modern organizations

Master the power of Terraform expressions and functions to write dynamic, reusable infrastructure code. Learn practical examples and best practices for templating, conditionals, and data manipulation.

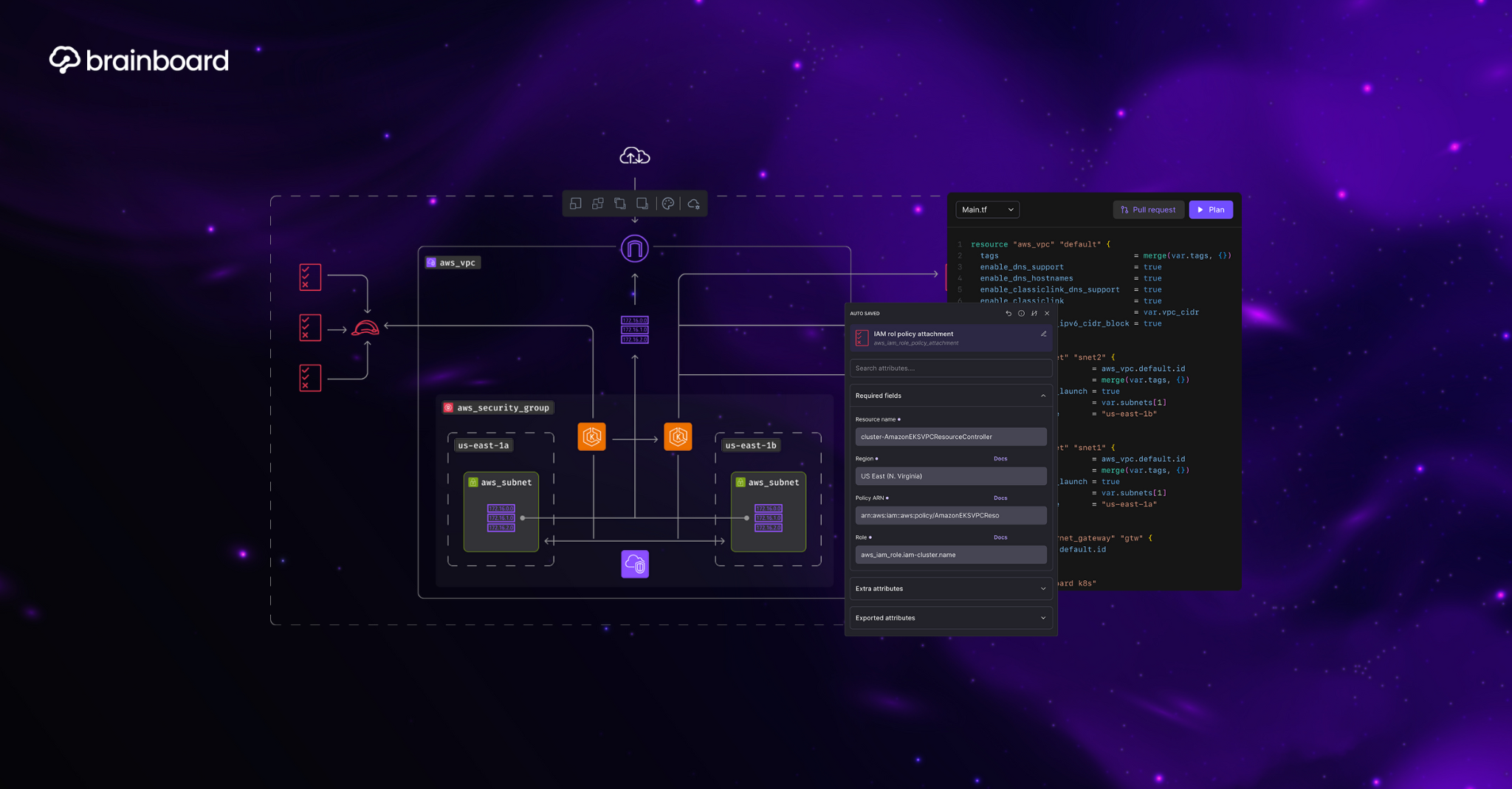

How AI-powered Terraform diagrammers are revolutionizing infrastructure and making complex cloud architectures easier to understand and manage.

Understand your return on investment when using Brainboard and how it helps you cut costs and remove bottlenecks.

Master Terraform lifecycle meta-arguments to control resource creation, updates, and deletion behaviors, ensuring your infrastructure deployments are reliable and predictable

Master Terraform remote state management for secure, collaborative infrastructure automation across distributed teams and multi-region deployments

A detailed comparison of the most widely used tools for creating and managing cloud infrastructure today. Which infrastructure tool should you choose in 2025?

Learn how to handle sensitive data in Terraform with best practices for secure infrastructure management. A must-read article.

Master Terraform splat expressions to simplify complex resource iterations and streamline your infrastructure as code workflows

Learn how to create multiple instances of the same configuration inside one resource with Terraform. Best practices and pitfalls to avoid.

A complete guide to Terraform state file management, with proven strategies for remote storage, locking, security, and team collaboration.

Master the art of writing cleaner, more maintainable Infrastructure as Code with Terraform dynamic blocks. Learn how to eliminate repetition and create flexible configurations.

Master Terraform type constraints to write safer, more maintainable infrastructure code. Learn how to leverage primitive and complex types for robust IaC solutions.

Keep up to date with best practices for using Terraform/OpenTofu modules to design future-proof cloud infrastructure.

Understand how to troubleshoot Terraform deployment failures with practical debugging techniques and real-world solutions.

Master Azure Functions best practices to build robust, scalable, and cost-effective serverless applications with proven strategies and implementation techniques

A complete analysis of IaC security scanning tools to help you understand the options and pick the right solutions for your cloud infrastructure.

A hands-on guide to best practices for managing multi-environment infrastructure with Terraform.

Master the art of infrastructure drift detection with proven strategies to maintain consistency, security, and reliability across your cloud environments

Master Terraform output values to streamline your infrastructure as code workflow. Learn how to extract, share, and leverage critical information from your Terraform configurations effectively.

Discover how modules revolutionize modern IaC development and infrastructure management, making your code more maintainable, reusable, and scalable.

Discover how Terraform providers work as the bridge between your infrastructure code and cloud platforms, making infrastructure automation seamless and efficient.

A comprehensive comparison of Terraform and CloudFormation to help you choose the right Infrastructure as Code tool for your cloud automation needs.

Learn how to combine Terraform data sources and functions to create dynamic, flexible infrastructure configurations that adapt to your environment's current state

Terraform moved from an open source license to the BSL, and the community forked it as OpenTofu, which is now gaining popularity. Know your options with Brainboard.

A complementary guide to building scalable and maintainable infrastructure with Terraform module composition patterns and best practices.

Master the art of managing multiple provider instances in Terraform to deploy infrastructure across different regions, accounts, and environments efficiently

Iterate over multiple resources efficiently with Terraform's count meta-argument. Learn practical examples, best practices, and common pitfalls to avoid.

Learn how to configure and manage resource timeouts in Terraform to prevent hanging deployments and optimize your infrastructure provisioning workflows

A comprehensive guide to dynamically creating multiple resources from key-value data in Terraform. Best practices, real-world examples, and common pitfalls.

A comprehensive comparison of Azure Function Apps and AWS Lambda to help you choose the right serverless platform for your applications

Master the art of using Terraform data sources to query and reference existing infrastructure resources, enabling dynamic and flexible Infrastructure as Code implementations

A comprehensive comparison of the top Infrastructure as Code tools to help you choose the right one for your cloud automation needs.

Learn how to effectively manage resource dependencies in Terraform to ensure proper creation order, prevent deployment failures, and build robust infrastructure configurations

Master AWS Identity and Access Management with proven security strategies and implementation techniques for robust cloud infrastructure protection

This article explores 21 different diagramming tools that we tested, including dedicated solutions for cloud architecture.

Terraform/OpenTofu drift is one of the biggest issues in IaC. Learn how to detect it and the best way to remediate it.